In the current AI/ML era, having a RAG system has a lot of advantages. RAG, or Retrieval Augmented Generation, is a technique that combines the capabilities of a pre-trained large language model with an external data source. This lets us use the power of LLMs like GPT-3, GPT-4, or freely available models like Llama to search that data and answer with nuanced responses. An LLM understands human language; however, it is not trained on specialised or private datasets. For example, it won’t know who Amitav Roy is or what he does. So, if we want to empower our LLM to respond to such questions, we need a RAG system.

The flow

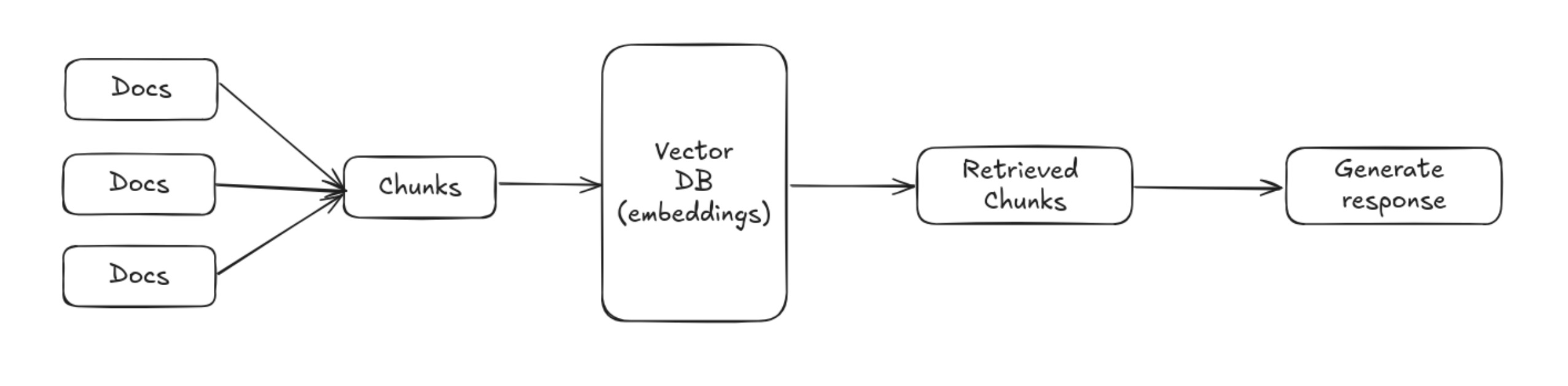

To understand the workings of a RAG system, we can look at the diagram above, which explains the flow.

To separate the concerns of this system, we split it into two parts. The first part is responsible for getting the docs, splitting them into chunks, and storing them in the vector db in the form of embeddings. Once this is done, we move to the second part, where we search the vector database for the chunks relevant to a question and pass them to the LLM, which uses them to generate a response.

Deep dive

The first thing that we need to do is collect data. The docs are the sources we collect information from, and they can come in different forms, such as web URLs, PDFs, and CSVs.

Langchain provides a lot of document loaders which allow us to extract content from different mediums. In this example, we are going to fetch information from URLs, and we will use “WebBaseLoader” along with “beautifulsoup4” to extract text from a website.

Loading data using WebBaseLoader is very easy and takes only a few lines.
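As a rough illustration, loading a single page might look like the sketch below. It assumes the `langchain_community` and `beautifulsoup4` packages are installed. The `load_page` helper name is made up for this example and is not taken from the original project.

```python
def load_page(url: str):
    """Fetch one web page and return it as a list of Langchain documents."""
    # Imported inside the function so the package is only required when a
    # page is actually loaded. WebBaseLoader uses beautifulsoup4 for parsing.
    from langchain_community.document_loaders import WebBaseLoader

    loader = WebBaseLoader(url)  # configure the loader with the page URL
    return loader.load()         # download the page and extract its text
```

Calling `load_page("https://example.com")` (a placeholder URL) should return a list of document objects, each exposing the extracted text as `page_content` and details such as the source URL in `metadata`.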

WebBaseLoader is imported from the langchain_community package. We pass a URL to a WebBaseLoader instance and then call its load method. The beauty of Langchain is that the framework gives you many other loaders which have very similar APIs.

Chunking

Chunking of data is a very important step. Most language models, including transformer-based models like GPT, have a maximum token limit (context window). If a document exceeds this limit, it cannot be processed in a single pass. Chunking the document into smaller parts ensures that each chunk fits within the model's context window.

Also, if a chunk is very large, the model receives a lot of unnecessary information and the response becomes less accurate. Splitting the document into chunks of the right size ensures that we send only useful context for the response. It also makes storage and retrieval more efficient.

The code for chunking the text is quite straightforward.
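To make the idea concrete, here is a simplified, character-based sketch of chunking with overlap. It is a stand-in written in plain Python, not Langchain's text splitter, which is more careful about where it cuts. The function name and the default sizes are assumptions chosen for illustration.

```python
def chunk_text(text: str, chunk_size: int = 500, overlap: int = 50) -> list[str]:
    """Split text into chunks of at most chunk_size characters.

    Consecutive chunks share `overlap` characters so that a sentence cut at
    a boundary still appears in full in at least one neighbouring chunk.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")

    step = chunk_size - overlap  # how far the window moves each time
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reached the end of the text
    return chunks


print(chunk_text("abcdefghij", chunk_size=4, overlap=1))  # ['abcd', 'defg', 'ghij']
```

The overlap is a trade-off: a larger value preserves more context across boundaries but stores more duplicated text.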

Embeddings & Chroma DB

To store the text in a way that can be searched and used as context for the LLM, we need to convert the text into embeddings. Embeddings are numeric vectors that represent the meaning of the text, so similar pieces of text end up close to each other. Chroma DB is one popular vector database, and it is the one we will use.

It allows us not only to store the embeddings but also to search them based on the questions that our RAG is trying to answer.
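The store-and-search idea can be sketched with a toy in-memory vector store. This is only an illustration of the concept and not how Chroma DB is implemented: the "embedding" here is a simple word-count vector, whereas a real embedding model returns dense vectors of floats. All names in the block are hypothetical.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: count how often each lower-cased word occurs."""
    return Counter(text.lower().split())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Return the cosine of the angle between two word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an empty text is not similar to anything
    return dot / (norm_a * norm_b)


class TinyVectorStore:
    """Keeps (text, vector) pairs in memory and ranks them against a query."""

    def __init__(self):
        self._items = []

    def add(self, texts):
        for text in texts:
            self._items.append((text, embed(text)))

    def search(self, query: str, k: int = 2):
        query_vec = embed(query)
        ranked = sorted(
            self._items,
            key=lambda item: cosine_similarity(query_vec, item[1]),
            reverse=True,
        )
        return [text for text, _ in ranked[:k]]


store = TinyVectorStore()
store.add([
    "Flask is a Python web framework",
    "Chroma stores embeddings for similarity search",
    "Llama is a freely available language model",
])
print(store.search("which database stores embeddings", k=1))
# ['Chroma stores embeddings for similarity search']
```

A real vector database does the same two jobs, storing vectors and ranking them by similarity to a query vector, but with learned embeddings and indexes that scale to many documents.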

We pass the split documents that we got from the text splitter to Chroma DB. We also need to specify which embeddings to use. Before saving the data, Chroma DB embeds the information and then persists it.

Here is the entire code that is responsible for scraping the information, splitting it, and then storing it in the Chroma DB.
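A minimal sketch of such an ingestion step is shown below, under several assumptions: the `langchain_community` and `langchain_text_splitters` packages are installed, `RecursiveCharacterTextSplitter` is the splitter in use, and the caller supplies a Langchain embeddings object of their choice. The function name, chunk sizes, and the `./chroma_db` directory are illustrative values, not the ones from the original project.

```python
def ingest(url: str, embeddings, persist_directory: str = "./chroma_db") -> int:
    """Scrape one URL, split it into chunks, and store the chunks in Chroma DB.

    `embeddings` is any Langchain embeddings object; which provider to use
    is left to the caller. Returns the number of chunks that were stored.
    """
    # Imported lazily so the packages are only needed when ingestion runs.
    from langchain_community.document_loaders import WebBaseLoader
    from langchain_community.vectorstores import Chroma
    from langchain_text_splitters import RecursiveCharacterTextSplitter

    # 1. Scrape: download the page and extract its text.
    docs = WebBaseLoader(url).load()

    # 2. Split: cut the documents into overlapping chunks (sizes are assumed).
    splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)
    chunks = splitter.split_documents(docs)

    # 3. Store: Chroma embeds each chunk and persists it to the directory.
    Chroma.from_documents(
        documents=chunks,
        embedding=embeddings,
        persist_directory=persist_directory,
    )
    return len(chunks)
```

Passing the embeddings object in as a parameter keeps the sketch independent of any one embedding provider.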

Getting the answer

The part where we get the answer is very straightforward. We take the question text and convert it into an embedding. Then we search our database to find similar chunks. Once we have them, we pass them to our LLM as context, and the LLM uses that context to generate a response for us.

Below is the entire code responsible for answering the question.
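The retrieve-then-generate flow can be sketched in a library-agnostic way. In the block below, `retrieve` and `generate` are hypothetical callables standing in for the vector database search and the LLM call, and the prompt wording is only an example.

```python
from typing import Callable, List


def build_prompt(question: str, chunks: List[str]) -> str:
    """Combine the retrieved chunks and the question into a single prompt."""
    context = "\n\n".join(chunks)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


def answer_question(
    question: str,
    retrieve: Callable[[str, int], List[str]],
    generate: Callable[[str], str],
    k: int = 3,
) -> str:
    """Find the k most similar chunks, then ask the LLM to answer with them."""
    chunks = retrieve(question, k)           # similarity search in the vector db
    prompt = build_prompt(question, chunks)  # chunks become the LLM's context
    return generate(prompt)                  # the LLM writes the final answer
```

In a real setup, `retrieve` would wrap the similarity search of the vector store and `generate` would wrap a call to the chosen LLM. Keeping them as parameters makes the flow easy to test with simple stand-ins.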

Powering through Flask API

Now, the above two code samples can be executed directly for results. However, I built the RAG for a chatbot, so I created APIs for the two actions using Flask.

Below is my main app.py file which is the entry point.
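A minimal sketch of what such an entry point might look like follows, assuming Flask is installed. The route names `/scrape` and `/ask`, the JSON field names, and the `create_app` factory are assumptions made for this example. The two actions are passed in as plain functions so the web layer stays separate from the RAG logic.

```python
def create_app(scrape_fn, ask_fn):
    """Build a Flask app exposing the two RAG actions as JSON endpoints.

    scrape_fn(url) should index a URL and return the number of stored chunks.
    ask_fn(question) should return the generated answer as a string.
    """
    # Imported inside the factory so Flask is only needed to build the app.
    from flask import Flask, jsonify, request

    app = Flask(__name__)

    def read_json():
        # Treat a missing, malformed, or non-object JSON body as empty.
        payload = request.get_json(silent=True)
        return payload if isinstance(payload, dict) else {}

    @app.route("/scrape", methods=["POST"])
    def scrape():
        url = read_json().get("url")
        if not url:
            return jsonify({"error": "url is required"}), 400
        return jsonify({"url": url, "chunks": scrape_fn(url)})

    @app.route("/ask", methods=["POST"])
    def ask():
        question = read_json().get("question")
        if not question:
            return jsonify({"error": "question is required"}), 400
        return jsonify({"answer": ask_fn(question)})

    return app
```

To run it locally, one could build the app with the two project functions, for example `app = create_app(my_scrape, my_ask)` where both names are placeholders, and then call `app.run()`.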

With this, you should be able to scrape URLs and then ask questions based on the information provided in those URLs.

Conclusion

There are a lot of ways we can use RAG systems, and this is just the first step. I am really excited about this, and I now tend to use it for many things. Let me know what you think about this setup and how you use RAGs.

By the way, if you are a visual learner, I have a complete video on YouTube explaining each and every step. You can find the video here: youtube link. You can also refer to the GitHub repository where I have the code: git repo
